Microservices vs Monoliths: A False Dichotomy

There is a perennial debate in tech around microservices and monoliths. Although there are a host of other articles discussing it, I fancied recording my thoughts so that I have something to link to in future.

Firstly I think framing this as a dichotomy is the wrong approach. Ultimately we have a bunch of code and we need to decide what should be deployed together vs separately. In other words, we need to find the right size for our services. Even describing it as the 'right size' glosses over the fact that you can usually make a number of different architectures work for a given problem, and they will all require hard work and diligence to make them effective.

Note that I'm being slightly loose with my use of 'service' here in light of Function as a Service (FaaS) paradigms, but suffice to say that we're operating on a spectrum with every function deployed separately on one side and every function deployed together on the other.

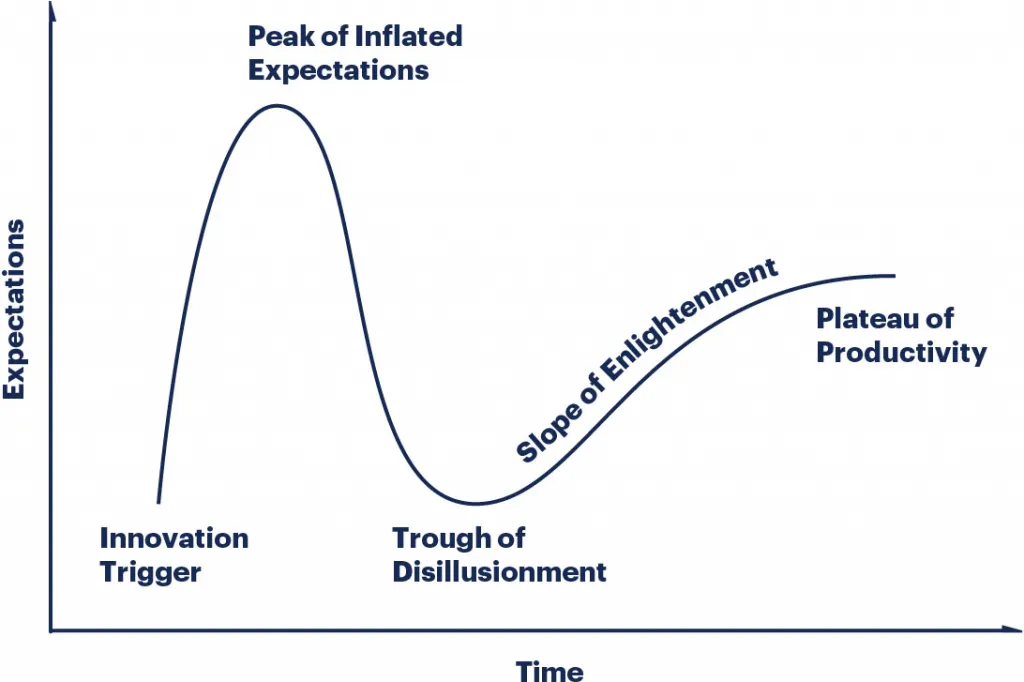

I think the 2010s were characterised by many people being too quick to split code due to the hype behind microservices (and then serverless). In the last few years it feels like the discourse has shifted though and hopefully we have now reached the 'plateau of productivity' where we can have more nuanced discussions about the best architecture for the job.

There are no silver bullets when it comes to software architecture and each option will come with a different set of trade-offs. The most important thing in my experience is to go into an implementation with your eyes open as to what those trade-offs are, so that you can make smart technical and product decisions to work around the downsides.

To that end I'll now discuss some benefits of grouping code into the same deployment unit vs the benefits of separating it.

Grouping Code

I tend to value simplicity very highly and I think most of the benefits of fewer deployment units fall under that banner. I'll break some of them down into headings below.

Setup Required

Firstly you'll need to do significantly less upfront work for a given piece of functionality since spinning up a service may require:

- Creating a CI pipeline

- Creating deployment manifests

- CD integration work

- Logging & monitoring setup

- Creating new SLAs

- Spinning up DBs/caches/topics/queues

- Linking up DBs to some sort of data lake to allow BI and product analytics

- Configuring security scanning

- Integrating with auth

That sounds like a lot of work and I've probably missed stuff! Most orgs with a large number of services will have solved this by building tooling to make creating compliant services a lot easier, but you need to figure out what's realistic for your company to support based on its size and engineering culture.

Testing

The next thing 'monolithic' deployments make simpler is end-to-end local testing of functionality.

Testing functionality which crosses service boundaries requires setting up something like a docker-compose file or a set of run scripts. Inevitably some of those tests will be flaky and require extra maintenance effort because you end up sending a request and waiting on a certain timeout for a response. All of that is much easier if you can just run the code in a blob.
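
To make the timeout problem concrete, here is a minimal Python sketch. Everything in it is hypothetical: `create_invoice` stands in for some business logic, and a `threading.Timer` stands in for a second service that answers asynchronously. It only aims to show why the cross-boundary test needs a polling helper and a guessed timeout, whereas the in-process test is a plain function call.

```python
import threading
import time


def create_invoice(order_total):
    """Hypothetical business logic."""
    return {"total": order_total, "status": "issued"}


def wait_until(predicate, timeout_s=2.0, interval_s=0.01):
    """Poll until predicate() is truthy or the timeout expires."""
    deadline = time.monotonic() + timeout_s
    while time.monotonic() < deadline:
        if predicate():
            return True
        time.sleep(interval_s)
    return bool(predicate())


def check_in_process():
    # Single deployment unit: call the code directly, nothing to wait for.
    return create_invoice(100.0)


def check_across_boundary():
    # Stand-in for another service that handles the request asynchronously.
    inbox = []
    worker = threading.Timer(0.05, lambda: inbox.append(create_invoice(100.0)))
    worker.start()
    # The test has to guess how long is "long enough": too short and it
    # flakes on a slow machine, too long and the suite crawls.
    arrived = wait_until(lambda: len(inbox) == 1, timeout_s=2.0)
    worker.join()
    return arrived
```

The choice of `timeout_s` is the part that tends to need ongoing maintenance, since the right value depends on the machine running the tests.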

Reasoning

In general splitting services means you have created a distributed system (hopefully you haven't created the dreaded distributed monolith!) and this is harder to reason about than a centralised system.

'Hard to reason about' is quite a fluffy phrase which gets thrown around lots in engineering discussions. What this might look like in practice is:

- More unexpected bugs cropping up due to people having inadequate mental models of the system

- Questions from stakeholders taking more time to answer, due to needing to go away and study/think about the system

- Estimates for new features being less accurate

Codebase Navigation

A related benefit is that navigating the codebase is generally easier when dealing with a single deployment unit as code paths can be followed via IDE features such as ctrl + click to navigate to a function definition.

In contrast code paths which cross service boundaries require tracing some sort of network request, which is much more time consuming as it typically involves a bunch of string searching.

This adds a hidden tax for every feature you work on, as well as contributing to the delays listed above for reasoning about the code.

Latency

There are a couple of factors which mean a given request will often have lower latency if it's served by a single service compared to a distributed system.

To illustrate let's show two possible toy designs for how we might build out a system where a UI needs access to data about a user and the organisation that user is a part of.

Single service design:

Multi-service design:

We can see that in this simplistic example we've added the elapsed time for 1 or 2 extra network requests to the end-to-end flow (depending on whether they're parallel or sequential), since the single service design can fetch the data for both tables from the DB in a single request with a table join.
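
As a rough illustration of the difference, here is a minimal Python sketch using an in-memory SQLite database. The table names, columns and sample rows are my own assumptions, and the two 'services' are just functions that restrict themselves to one table each (in a real split each would own its own DB and sit behind a network call).

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    CREATE TABLE organisations (id INTEGER PRIMARY KEY, name TEXT);
    CREATE TABLE users (
        id INTEGER PRIMARY KEY,
        name TEXT,
        org_id INTEGER REFERENCES organisations(id)
    );
    INSERT INTO organisations VALUES (1, 'Acme');
    INSERT INTO users VALUES (10, 'Sam', 1);
    """
)


def single_service_profile(user_id):
    """One service, one DB: both tables fetched with a single JOIN."""
    downstream_calls = ["db: users JOIN organisations"]
    user_name, org_name = conn.execute(
        "SELECT u.name, o.name FROM users u "
        "JOIN organisations o ON o.id = u.org_id WHERE u.id = ?",
        (user_id,),
    ).fetchone()
    return {"user": user_name, "organisation": org_name}, downstream_calls


def multi_service_profile(user_id):
    """Two hypothetical services, each reading only its own table."""
    downstream_calls = ["network: user service"]
    user_name, org_id = conn.execute(
        "SELECT name, org_id FROM users WHERE id = ?", (user_id,)
    ).fetchone()
    # This lookup needs org_id from the first response, so it cannot
    # be issued in parallel with it.
    downstream_calls.append("network: organisation service")
    (org_name,) = conn.execute(
        "SELECT name FROM organisations WHERE id = ?", (org_id,)
    ).fetchone()
    return {"user": user_name, "organisation": org_name}, downstream_calls
```

Both functions return the same profile, but the second one needs two downstream calls to assemble it, and the data dependency on `org_id` forces those calls to be sequential.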

Depending on how things have been decomposed into services the number of calls can grow quickly for more complex cases and this can have a significant impact on page load times (and hence things like conversion rates).
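
A back-of-the-envelope model for this, sketched in Python: calls made in parallel cost roughly as much as the slowest one, whereas sequential calls add up. The function name and the millisecond figures are made up for illustration, and the model ignores retries, queueing and serialisation overhead.

```python
from typing import Sequence


def added_latency_ms(call_latencies_ms: Sequence[float], parallel: bool) -> float:
    """Extra elapsed time from downstream calls.

    Parallel calls cost as much as the slowest call; sequential calls sum.
    """
    if not call_latencies_ms:
        return 0.0
    return max(call_latencies_ms) if parallel else sum(call_latencies_ms)
```

With two hypothetical calls of 20 ms and 30 ms, `added_latency_ms([20.0, 30.0], parallel=True)` gives 30.0 while `parallel=False` gives 50.0. Ten sequential calls of 20 ms each would already add 200 ms.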

Splitting Code

Monoliths aren't all roses though, so let's dive into some of the benefits of splitting up code.

Independent Scaling

A major benefit of splitting code up is the ability to scale things independently. You might need independent scaling for a number of reasons:

- If some code has different compute requirements then you may want to run it on different hardware. For instance if you're adding a feature that requires doing some video encoding or rendering work then you may need to run it on a node with a GPU, but you don't want to add this cost overhead to the nodes running your existing software. This can be characterised as needing vertical scaling.

- If a feature has a very different usage pattern then you may want to run it with a different number of instances (and allow those instances to scale up and down differently). An example of this would be allowing users to kick off some sort of batch processing job which you don't want to interfere with the performance of an existing webapp. In other words this is when you need horizontal scaling.

This is by far the strongest reason to split out a service in my experience.

Language Choice

A commonly touted reason for splitting things out is the ability to access different programming languages more easily. However, I have mixed feelings on this one.

In some situations this can be pretty reasonable. Maybe you have a part of your platform which is extremely latency sensitive, such as real-time voice processing. In such a case your Node.js backend likely won't cut it and you need to drop down to a lower level language such as C++ or Rust.

I think the key part here is having concrete reasons for why you need a different language that don't just amount to 'resume driven development'. I've worked at places which didn't restrict language use to a reasonable subset soon enough, and it exacerbated a number of organisational problems by limiting people's ability to contribute to different areas.

It should be noted that it's not strictly necessary to introduce a service boundary to get the pros or cons of using different programming languages. Just look at the many foundational Python libraries written in C or Fortran for a good example of this (or shudder at the random R scripts run as subprocesses I've seen as a bad example). Ultimately this is a classic case of needing to find the right balance between consistency and freedom, a balance that underlies many decisions at an organisational level.

Enforced Module Boundaries

Enforcing clean module boundaries is vital for the ongoing maintainability of a software system, otherwise you end up with a 'ball of mud' which is harder to reason about and make changes to.

It is typically much easier to enforce boundaries between different bits of code when they're deployed separately. This is true both technically because the code might be in different repositories and/or the build system may separate them, but also organisationally it seems to be more effective to assign ownership of a particular service to a team rather than assigning ownership of modules (although I would love to see examples of this not being the case).

In a mono-repo context the distinction between separate services vs modules in a monolith becomes less clear since the potential for cross module imports can sneak back in. I have used custom ESLint rules to restrict this in the past, but it can be awkward to set up. This is an area where I'd love to see some better tooling and conventions in the TypeScript/JavaScript ecosystem.
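
To give a flavour of what such a check does, here is a minimal sketch of the same idea in Python using the standard library `ast` module. This is an analogue for illustration only, not the ESLint rules mentioned above; the package names (`billing`, `accounts`, `shared`) and the allow-list are hypothetical.

```python
import ast

# Hypothetical internal packages, and which of them each one may import.
ALLOWED_IMPORTS = {
    "billing": {"billing", "shared"},
    "accounts": {"accounts", "shared"},
    "shared": {"shared"},
}


def boundary_violations(owner, source):
    """Return the internal packages that `owner` imports but shouldn't."""
    allowed = ALLOWED_IMPORTS[owner]
    violations = []
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, ast.Import):
            names = [alias.name for alias in node.names]
        elif isinstance(node, ast.ImportFrom) and node.module and node.level == 0:
            names = [node.module]
        else:
            continue
        for name in names:
            top_level = name.split(".")[0]
            # Third party and standard library imports are not our concern.
            if top_level in ALLOWED_IMPORTS and top_level not in allowed:
                violations.append(top_level)
    return violations
```

Running something like this in CI over each module's files would fail the build when, say, `billing` reaches into `accounts` directly. Relative imports are ignored here to keep the sketch short.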

The other important point to talk about here is data separation. I'm writing this with the assumption that separate services will always have separate DBs (or separate DB schemas at least). Whilst there are exceptions to any rule, shared DB access between multiple services is a huge red flag for me (this comes back to not creating a distributed monolith which combines the worst of both worlds).

In this era of easy to set up auto-scaling my experience is that most webapps will start to hit scaling issues in the DB first. Splitting your services gives you implicit sharding by functional area which can take you a surprisingly long way in terms of performance without needing to do anything too fancy, as well as significantly reducing the blast radius when you do start to have issues.

I'm yet to experience a team with really effective performance monitoring and proactive capacity planning in place but I would love to learn more about setting this up. So far my experience has been that shit hits the fan when the DB grinds to a halt, then you have to figure out a way to scale things up in a hurry! Sadly I suspect this is fairly typical for smaller companies so it's significantly less stressful to have a degraded experience in one specific part of your app instead of the all important DB going down and tanking everything.

Astute readers might notice that this is the flip side of the point I made above about latency, since what was a JOIN now requires an extra network request. It's trade-offs all the way down!

Principle of Least Privilege

Splitting services out to reduce blast radius also applies on the security side since it's generally easier and more effective to apply the principle of least privilege to separate services.

Let's illustrate this point with another silly example: imagine you are building a REST API with a number of endpoints, each of which interacts with an endpoint from a third party API. You've been tasked with adding a deleteAllTheThings endpoint which needs to call your supplier's irrevocablyDeleteEverything endpoint (if this is ringing any bells, you might want to take another look at your vendor selection process!).

Your API will have some credentials which it uses to generate an access token for authenticating with the third party API. If you add the rights to call irrevocablyDeleteEverything to your existing client then suddenly the risk associated with accidentally leaking those credentials has gone way up.

If instead the new functionality was added as a separate service using a new client with only that permission then we don't need to audit the entire existing codebase to be confident that secrets are being handled properly.
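
Here is a minimal Python sketch of that arrangement. The `ApiClient` class, the scope strings and the `call_supplier` function are all invented for illustration, and a real third party API will have its own permission model. The point is only that the dangerous right lives on a client which the existing codebase never sees.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ApiClient:
    """Hypothetical stand-in for a set of credentials issued by the supplier."""
    name: str
    scopes: frozenset


class ScopeError(PermissionError):
    """Raised when a client tries to use a right it was never granted."""


def call_supplier(client, endpoint, required_scope):
    # Stand-in for the supplier checking the access token's permissions.
    if required_scope not in client.scopes:
        raise ScopeError(f"{client.name} lacks {required_scope!r} for {endpoint}")
    return f"{client.name} called {endpoint}"


# The existing API keeps exactly the rights it already had.
main_api = ApiClient("main-api", frozenset({"things:read", "things:write"}))

# Only the new, much smaller service holds the destructive right.
deletion_service = ApiClient("deletion-service", frozenset({"things:delete-all"}))
```

If the `main_api` credentials leak, the attacker still can't reach irrevocablyDeleteEverything, and the code that needs auditing for the destructive right is limited to the new service.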

Conclusion

Hopefully you now have a better idea of the trade-offs at play when you need to make a decision about service boundaries. As you can see there are lots of factors involved and it can be a bit overwhelming trying to predict what will work best for your future needs.

My best advice for system design overall is to follow these two heuristics:

- Keep things as simple as reasonably possible

- Build things in a way that makes it easy to change your mind later
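
One possible reading of the second heuristic, sketched in Python: have callers depend on a small interface so that an in-process module can later be swapped for a client of a separate service without touching them. All of the names here are hypothetical, and `RemoteOrganisations` takes a `fetch` callable as a placeholder for whatever network client you would actually use.

```python
from typing import Callable, Dict, Protocol


class OrganisationLookup(Protocol):
    def name_for(self, org_id: int) -> str: ...


class InProcessOrganisations:
    """Today: organisations live in the same deployment unit."""

    def __init__(self, names: Dict[int, str]) -> None:
        self._names = dict(names)

    def name_for(self, org_id: int) -> str:
        return self._names[org_id]


class RemoteOrganisations:
    """Later, perhaps: the same interface backed by a separate service."""

    def __init__(self, fetch: Callable[[int], str]) -> None:
        self._fetch = fetch  # placeholder for a real network call

    def name_for(self, org_id: int) -> str:
        return self._fetch(org_id)


def describe_user(user_name: str, org_id: int, organisations: OrganisationLookup) -> str:
    # The caller neither knows nor cares where organisations live.
    return f"{user_name} ({organisations.name_for(org_id)})"
```

Moving the boundary then becomes a change to how `organisations` is constructed rather than a rewrite of every caller, although the latency and failure modes discussed above still change underneath.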

I'd like to write up more of a guide for system design in the real world at some point, so let me know if that's something you'd be interested in!